Face Controller

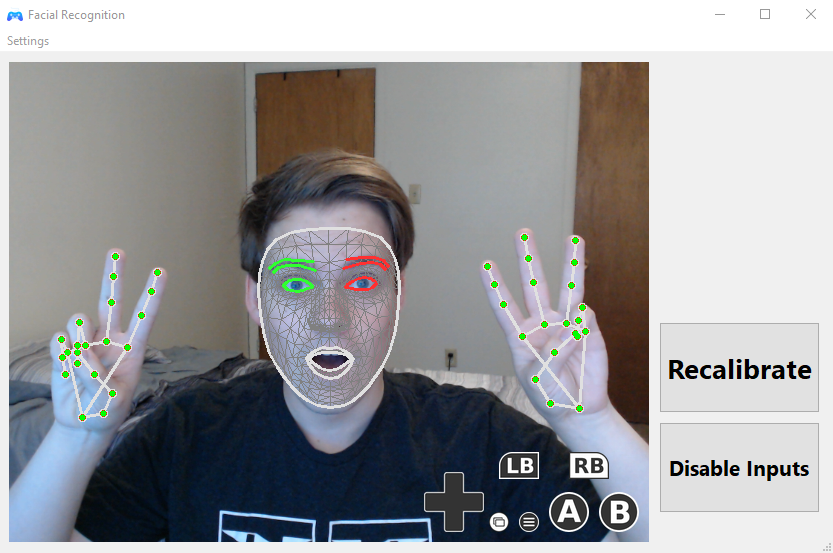

This project is a human-computer interaction system that translates facial expressions and hand gestures into keyboard input. It uses Google's MediaPipe to track facial landmarks (for eyebrow movement analysis) and hand landmarks (for hand gesture recognition) in real time, so that users can trigger customizable key presses without touching the keyboard. A GUI built with Python's Tkinter library handles calibration, personalization, and operational control.

Features

- Different eyebrow movements trigger different, configurable key presses.

- Different hand gestures trigger different, configurable key presses.

- User-friendly GUI built with Python Tkinter.

- Calibration mode for personalized gesture recognition.

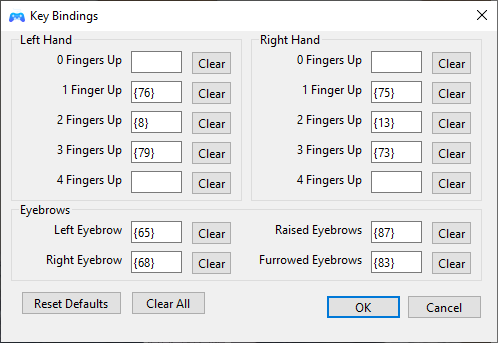

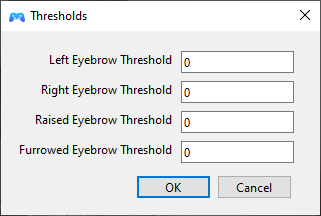

- Adjustable sensitivity thresholds for detection.

- Customizable mapping of gestures to specific key presses.

- Live camera feed displayed within the GUI.

- Real-time visualization of facial and hand landmarks.

- On-screen display of detected gestures and corresponding key outputs.
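The adjustable thresholds and the customizable gesture-to-key mapping could fit together roughly as in the sketch below. It is a hypothetical helper, not the project's code: the class name `GestureKeyMapper`, the per-gesture "score" values, and the edge-triggered behavior (a key fires once when its score rises past the threshold, rather than on every frame the gesture is held) are assumptions. The source does not say which library sends the key press, so it is injected as a `press_key` callback; a function such as `pyautogui.press` could be passed in.

```python
from typing import Callable, Dict, List


class GestureKeyMapper:
    """Hypothetical helper: presses a mapped key once each time a gesture's
    score rises past its threshold (edge-triggered, so holding a raised
    eyebrow does not repeat the key on every frame)."""

    def __init__(self, key_map: Dict[str, str], thresholds: Dict[str, float],
                 press_key: Callable[[str], None]) -> None:
        self.key_map = dict(key_map)        # gesture name -> key to press
        self.thresholds = dict(thresholds)  # gesture name -> sensitivity threshold
        self.press_key = press_key          # e.g. pyautogui.press (assumption)
        self._active = {name: False for name in self.key_map}

    def set_threshold(self, gesture: str, value: float) -> None:
        # Called when the user moves a sensitivity slider in the GUI.
        self.thresholds[gesture] = value

    def remap(self, gesture: str, key: str) -> None:
        # Called when the user assigns a different key to a gesture.
        self.key_map[gesture] = key
        self._active.setdefault(gesture, False)

    def update(self, scores: Dict[str, float]) -> List[str]:
        """Process one frame of gesture scores and return the keys pressed."""
        pressed = []
        for gesture, score in scores.items():
            if gesture not in self.key_map:
                continue  # unmapped gestures are ignored
            # A gesture without a threshold never fires.
            above = score > self.thresholds.get(gesture, float("inf"))
            if above and not self._active[gesture]:
                key = self.key_map[gesture]
                self.press_key(key)
                pressed.append(key)
            self._active[gesture] = above
        return pressed


if __name__ == "__main__":
    log: List[str] = []
    mapper = GestureKeyMapper({"eyebrow_raise": "space"},
                              {"eyebrow_raise": 0.05}, press_key=log.append)
    for score in (0.08, 0.09, 0.01, 0.07):  # raise, hold, relax, raise again
        mapper.update({"eyebrow_raise": score})
    print(log)  # ['space', 'space']
```

Edge triggering is one possible design choice; a per-key cooldown timer would be an alternative if repeated presses while holding a gesture were desired.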

Gallery

Technical Details

The system uses Google's MediaPipe library to analyze the user's face and hands in real time from a webcam feed. The Facial Landmark module detects and tracks key points on the face, from which eyebrow movements are interpreted (for example, the vertical displacement of the eyebrow landmarks). Similarly, the Hand Landmark module tracks key points on the hand to identify finger configurations. Each detected movement is compared against a user-defined threshold, and when a movement surpasses its threshold, the system programmatically triggers the key press assigned to it.

The entire application is wrapped in a Python Tkinter GUI. The GUI handles calibration (recording baseline expressions and gestures), sensitivity threshold adjustment, and key binding customization, and it provides visual feedback: the camera stream, landmark overlays, and the key presses that were activated.
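The landmark geometry described above can be sketched as follows, assuming the landmarks have already been extracted from MediaPipe as normalized `(x, y)` tuples (MediaPipe's normalized image coordinates, where y grows downward). The function and class names, the choice of which face points to pass in as brow, eye, forehead, and chin, the face-height normalization, and the "fingertip above its PIP joint" rule are illustrative assumptions rather than the project's actual method. The hand indices follow MediaPipe's 21-point hand landmark layout.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence, Tuple

Point = Tuple[float, float]  # normalized (x, y); y grows downward in the image


def eyebrow_gap(brow: Point, eye: Point, forehead: Point, chin: Point) -> float:
    """Vertical brow-to-eye distance, divided by face height so the value
    stays roughly constant as the user moves toward or away from the camera."""
    face_height = abs(chin[1] - forehead[1])
    if face_height == 0:
        return 0.0
    return (eye[1] - brow[1]) / face_height


@dataclass
class EyebrowCalibration:
    baseline: float = 0.0

    def calibrate(self, neutral_gaps: Sequence[float]) -> None:
        # Average the gap over frames captured while the user holds a neutral face.
        self.baseline = mean(neutral_gaps)

    def displacement(self, gap: float) -> float:
        # Positive when the eyebrows are raised above the neutral baseline.
        return gap - self.baseline


# MediaPipe hand landmark indices for the index, middle, ring, and pinky
# fingertips and their PIP joints. The thumb is skipped because it folds
# sideways, which a simple vertical comparison does not capture.
FINGER_TIPS = (8, 12, 16, 20)
FINGER_PIPS = (6, 10, 14, 18)


def extended_fingers(hand: Sequence[Point]) -> Tuple[bool, ...]:
    """Treat a finger as extended when its tip is higher in the image
    (smaller y) than its PIP joint; assumes an upright hand."""
    return tuple(hand[tip][1] < hand[pip][1]
                 for tip, pip in zip(FINGER_TIPS, FINGER_PIPS))


if __name__ == "__main__":
    calib = EyebrowCalibration()
    calib.calibrate([0.10, 0.11, 0.09])  # neutral-face samples
    gap = eyebrow_gap(brow=(0.4, 0.30), eye=(0.4, 0.45),
                      forehead=(0.5, 0.10), chin=(0.5, 0.90))
    print(round(calib.displacement(gap), 4))  # 0.0875

    hand = [(0.5, 0.6)] * 21       # 21 placeholder landmarks
    hand[6], hand[8] = (0.5, 0.5), (0.5, 0.2)  # index finger pointing up
    print(extended_fingers(hand))  # (True, False, False, False)
```

The resulting eyebrow displacement, or a finger pattern such as "only the index finger extended", is what would then be compared against the user's threshold or mapped to a key.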